7 Review

The best way to review for the midterm will be to read the notes in this book and to carefully go through the problem set questions.

All of the questions in the problem sets are designed to be straightforward applications of the class material. Often they are just class examples with renamed variables. Try to think about what the different parts of each example are doing, and try editing things to see whether they still work. For example, if a class example makes deciles of population density, what happens if you make deciles of income instead?

The midterm questions will similarly be straightforward applications of class examples. There may be cases where things are presented in a new form or combine concepts, but if you have a grasp of the in-class and problem set code there will be absolutely nothing surprising about the midterm.

My other tips for the midterm are:

- Knit your R Markdown document often so you don’t get stuck at the end.

- Read every question fully before starting.

- Pay attention to extra information I’ve provided.

- Pay attention to the words I use in the questions and how those words relate to the course material.

- Make sure to put something down for absolutely every question (I can’t give partial credit if you have literally nothing written).

7.1 Broad view of the course

Here are some brief notes and examples to give a very broad view of what I hope you will pick up in each chapter. This is designed to give you a sense of what each chapter is about and what I want you to learn; it is definitely not sufficient for studying on its own.

7.1.1 What is Data?

The focus of this chapter was to have you think through the ultimate goals of data science and statistics. In this class we are going to spend a lot of time manipulating individual datasets, but that is not itself the purpose of statistics. The purpose of statistics is to use the sample of data we have to make inferences about broader populations. These inferences work because our dataset is one of an infinite number of datasets that could have existed, so everything we calculate from it is subject to some degree of sampling variability. We want to assess how likely we would be to observe our data, given assumptions about the truth and a measure of that sampling variability.
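The idea that our dataset is just one of many possible datasets can be made concrete with a small simulation. The sketch below is an illustration rather than a class example: the population is made up (100,000 normally distributed values with mean 50 and standard deviation 10), and the object names are hypothetical. It draws 1,000 samples of size 100 and shows that the sample means center on the population mean but vary from sample to sample.

```r
# A minimal sketch of sampling variability (all numbers are made up)
set.seed(123)  # fix the random draws so the sketch is replicable

# Pretend this is the full population we can never actually observe
population <- rnorm(100000, mean = 50, sd = 10)

# Our "dataset": one sample of 100 people from that population
one.sample <- sample(population, 100)
mean(one.sample)

# All the other datasets we could have drawn instead
sample.means <- rep(NA, 1000)
for (i in 1:1000) {
  sample.means[i] <- mean(sample(population, 100))
}

# The sample means cluster around the population mean...
mean(sample.means)
# ...but differ from sample to sample; this spread is the sampling variability
sd(sample.means)
```

With these made-up numbers, the standard deviation of the sample means should come out close to 10/sqrt(100) = 1, the standard error of the mean.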

7.1.2 Basic R

- A broad understanding of why we are using R, and why the use of scripts is key for replicability.

- Using R as a basic calculator.

4 + 2

#> [1] 6

2 + sqrt(4)

#> [1] 4

- Creating objects, including individual numbers, vectors, and matrices.

- The use of functions to operate on those objects.

mean(x)

#> [1] 3

- The use of square brackets to access information stored in objects.

y[3]

#> [1] 3

dat[1,2]

#> z

#> 4

dat[1,]

#> y z

#> 1 4

dat[,1]

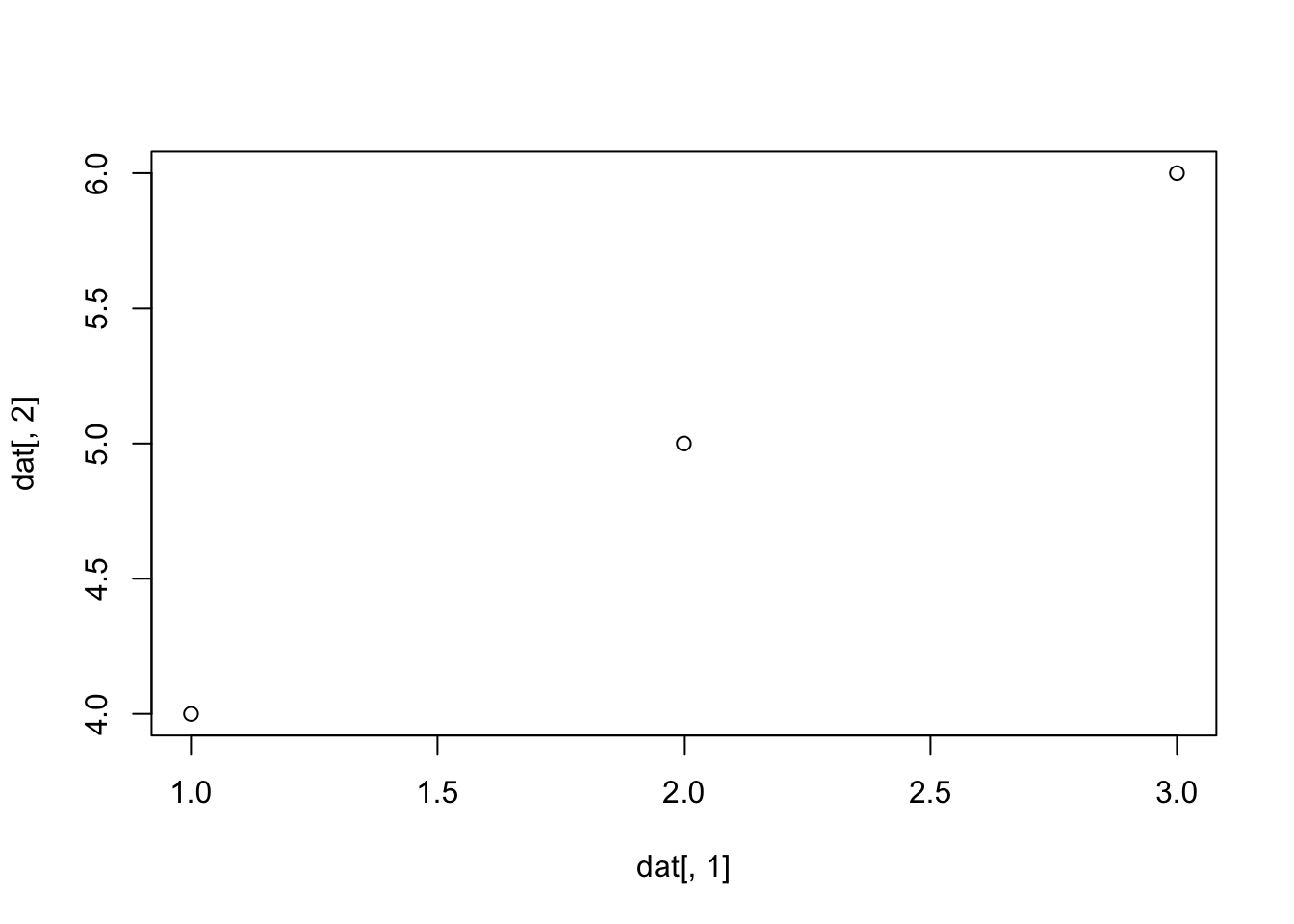

#> [1] 1 2 3- Basic plots including barplots, boxplots, and scatterplots.

plot(dat[,1], dat[,2])
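The examples above use objects named x, y, and dat without showing how they were created. The sketch below defines one set of objects that is consistent with the printed outputs; the exact values (and the extra objects a and m) are assumptions worked backwards from those outputs, not necessarily what was used in class.

```r
# Hypothetical objects, chosen so the outputs match the ones printed above
a <- 5                       # an individual number
x <- c(1, 2, 3, 4, 5)        # a vector; mean(x) is 3
y <- c(1, 2, 3)
z <- c(4, 5, 6)
dat <- cbind(y, z)           # a 3 x 2 matrix with columns named "y" and "z"
m <- matrix(1:6, nrow = 3)   # matrix() fills column by column

mean(x)      # a function operating on an object
y[3]         # the third element of a vector
dat[1, 2]    # row 1, column 2
dat[1, ]     # every column of row 1
dat[, 1]     # every row of column 1
dat[, "z"]   # matrix columns can also be picked out by name
m[3, 2]      # 6, because the second column of m holds 4, 5, 6
```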

7.1.3 Conditional Logic

- Using logical statements to create boolean variables (==, >, <, !, %in%).

3>4

#> [1] FALSE

3==sqrt(9)

#> [1] TRUE

- Using boolean variables to subset existing data.

dat[dat[,1]>1,]

#> y z

#> [1,] 2 5

#> [2,] 3 6

- Stringing together multiple logical statements with & and |.

3==3 & 3==4

#> [1] FALSE

3==3 | 3==4

#> [1] TRUE

- Making plots with conditional logic.
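The list above mentions ! and %in% without an example, and the plotting item has no code. Below is a minimal sketch, assuming the same small dat matrix as in the Basic R section (redefined here so the block runs on its own). The colour vector is one hypothetical way to bring a condition into a plot, not necessarily the approach used in class.

```r
# Same hypothetical matrix as in the Basic R examples (an assumption)
dat <- cbind(y = c(1, 2, 3), z = c(4, 5, 6))

# %in% asks whether each element on the left appears in the set on the right
c(1, 5, 3) %in% c(1, 2, 3)      # TRUE FALSE TRUE

# ! flips TRUE to FALSE and FALSE to TRUE
!(c(1, 5, 3) %in% c(1, 2, 3))   # FALSE TRUE FALSE

# Keep the rows whose y value is NOT 2
dat[!(dat[, "y"] %in% 2), ]

# One colour per row based on a condition; passing this vector as the col
# argument of plot() would highlight the points where y > 1
point.colors <- ifelse(dat[, "y"] > 1, "red", "black")
point.colors
```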

7.1.4 Data Frames
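The data frame ideas in this section are illustrated with the ACS county file. As a self-contained companion, here is a minimal sketch on a tiny made-up data frame (the name counties, the county names, and all values are hypothetical; the column names are borrowed from the ACS examples). It focuses on two items that have no code below: why a data frame beats a cbind() matrix, and how missing data behaves when subsetting and recoding.

```r
# A tiny made-up data frame (hypothetical values; column names borrowed
# from the ACS examples)
counties <- data.frame(
  county.name = c("A County", "B County", "C County", "D County"),
  population = c(120000, 45000, NA, 980000),
  percent.transit.commute = c(2.5, NA, 35.1, 41.0)
)

# cbind() builds a matrix, and a matrix holds a single type, so mixing
# text and numbers turns everything into character
mixed <- cbind(counties$county.name, counties$population)
class(mixed[1, 2])       # "character"

# A data frame keeps each column's own type
sapply(counties, class)

# Named columns plus square brackets; the county with a missing population
# comes back as NA because its condition is NA
counties$population[counties$population > 100000]

# which() returns the row numbers where the condition is TRUE, skipping NAs
which(counties$percent.transit.commute > 30)

# Recoding while preserving missing data: start with NA and fill in both
# groups, so the county with no commute data stays NA instead of becoming 0
counties$high.transit <- NA
counties$high.transit[counties$percent.transit.commute > 30] <- 1
counties$high.transit[counties$percent.transit.commute <= 30] <- 0
counties$high.transit    # 0 NA 1 1

# Most summaries return NA when any value is missing unless told otherwise
mean(counties$population)
mean(counties$population, na.rm = TRUE)
```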

acs <- rio::import("https://github.com/marctrussler/IDS-Data/raw/main/ACSCountyData.csv", trust = TRUE)

- Understanding the use case for a data frame over a “sample” matrix created with cbind().

- The use of named columns to access variables in a dataset.

head(acs$population)

#> [1] 102939 23875 37328 55200 208107 25782

- Extending our knowledge of square brackets and conditional logic to data frames.

acs$population[acs$population>1E7]

#> [1] 10098052

- The use of which() to determine rows that match a condition.

which(acs$percent.transit.commute>40)

#> [1] 1785 1834 1855 1862 1872

- Using conditional logic to recode variables.

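acs$high.transit <- NA

acs$high.transit[acs$percent.transit.commute>30] <- 1

acs$high.transit[acs$percent.transit.commute<=30] <- 0

# Or, as a TRUE/FALSE variable (counties with missing data stay NA either way)

acs$high.transit <- acs$percent.transit.commute>30

- Understanding and preserving missing data.

- Converting dates to the right class.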

lubridate::ymd("2020-02-01")

#> [1] "2020-02-01"

- Editing and cleaning character information.

gsub("_","","test_test")

#> [1] "testtest"

tolower("BBB")

#> [1] "bbb"

toupper("ccc")

#> [1] "CCC"

out <- tidyr::separate(acs,
                       "county.name",
                       into = c("county", "extra"),
                       sep = " ")

#> Warning in gregexpr(pattern, x, perl = TRUE): input string

#> 1805 is invalid UTF-8

#> Warning: Expected 2 pieces. Additional pieces discarded in 209 rows

#> [57, 68, 69, 70, 71, 73, 74, 76, 77, 79, 80, 81, 82, 83,

#> 84, 85, 87, 88, 89, 91, ...].

#> Warning: Expected 2 pieces. Missing pieces filled with `NA` in 1

#> rows [1805].

head(out$county)

#> [1] "Etowah" "Winston" "Escambia" "Autauga" "Baldwin"

#> [6] "Barbour"

7.1.5 Cleaning and Reshaping

- Using commands like duplicated() to find errors or unwanted information in a dataset.

- Reshaping data using the pivot commands (pivot_longer() and pivot_wider()).

- Renaming variables to properly formatted names.
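None of the items in this list comes with code here, so below is a minimal sketch on made-up data (the data frames wide, long, and messy and all of their columns are hypothetical). It assumes the tidyr package, which provides pivot_longer() and pivot_wider() as well as the separate() function used above.

```r
library(tidyr)  # provides pivot_longer() and pivot_wider()

# Hypothetical county data in "wide" form, with one row accidentally repeated
wide <- data.frame(
  county = c("A", "B", "A"),
  pop.2019 = c(100, 200, 100),
  pop.2020 = c(110, 210, 110)
)

# duplicated() flags rows that exactly repeat an earlier row
duplicated(wide)               # FALSE FALSE TRUE
wide <- wide[!duplicated(wide), ]

# Wide to long: one row per county-year; names_prefix strips the leading
# "pop." from the old column names so the year column holds "2019"/"2020"
long <- pivot_longer(wide,
                     cols = c(pop.2019, pop.2020),
                     names_to = "year",
                     names_prefix = "pop\\.",
                     values_to = "population")
long

# Long back to wide: one column per year (named `2019` and `2020`)
pivot_wider(long, names_from = year, values_from = population)

# Renaming messy variable names (spaces, capitals) to a consistent format
messy <- data.frame(`County Name` = c("A", "B"),
                    `Total Population` = c(100, 200),
                    check.names = FALSE)
names(messy) <- tolower(gsub(" ", ".", names(messy)))
names(messy)                   # "county.name" "total.population"
```

The wide result of pivot_wider() has column names that start with digits, which is exactly the kind of name the renaming step is meant to fix.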

7.1.6 For/If

- The use of for() loops to aggregate data to a higher level.

- The use of for() loops to work on chunks of data in a dataset one at a time.

- The use of for() loops to simulate probability.

- The use of double for() loops to operate across two dimensions of clusters.

- The use of if() statements to run code based on a condition.
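The sketch below walks through the items in this list on a tiny made-up dataset. The state codes, populations, the 150-person size cutoff, and the dice question are all illustrative assumptions, not class examples. Each loop pre-allocates a vector or matrix of NA and fills in one slot per iteration.

```r
set.seed(42)  # makes the simulation below replicable

# Hypothetical county-level data nested within states
counties <- data.frame(
  state = c("PA", "PA", "NJ", "NJ", "NJ"),
  population = c(100, 250, 80, 120, 300)
)

# Aggregating to a higher level: work on one state's chunk of rows at a time
states <- unique(counties$state)
state.pop <- rep(NA, length(states))
for (i in seq_along(states)) {
  state.pop[i] <- sum(counties$population[counties$state == states[i]])
}
data.frame(state = states, population = state.pop)  # PA 350, NJ 500

# Simulating probability: how often do two fair dice sum to 7?
n.sims <- 10000
is.seven <- rep(NA, n.sims)
for (i in 1:n.sims) {
  roll <- sample(1:6, 2, replace = TRUE)
  is.seven[i] <- sum(roll) == 7
}
mean(is.seven)  # should be close to the exact answer, 6/36 (about 0.167)

# A double loop across two dimensions of clusters: state by county size
counties$size <- ifelse(counties$population > 150, "large", "small")
sizes <- c("small", "large")
counts <- matrix(NA, nrow = length(states), ncol = length(sizes),
                 dimnames = list(states, sizes))
for (i in seq_along(states)) {
  for (j in seq_along(sizes)) {
    counts[i, j] <- sum(counties$state == states[i] & counties$size == sizes[j])
  }
}
counts

# if(): run a chunk of code only when its condition is TRUE
if (max(state.pop) > 400) {
  print("At least one state has more than 400 people")
}
```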